Research Objectives:

This paper aims to present the architectural model of an AI-Based Automated Robotic System with an Adaptive Interface.

Keywords:

Robotics, AI, Automated, IoT, AWS Cloud

Bio

Anuj Surao’s journey is marked by years of excellence and innovation. In 2013, he received the National Green Leader Award from ITC PSPD Ltd for his impactful Wealth Out of Waste initiative. In 2020, he was honoured with the Above and Beyond Award for exceptional contributions to engineering and automation at Atronix MHS. His tenure at Amazon Robotics has earned him numerous accolades, including the Global Icon Award in 2024 and recognition as an Eminent Speaker at the Science Center, Cambridge, MA, during the Global Research Conferences organised by the London Organisation of Skills Development. Currently a Systems Development Engineer II at Amazon Robotics, Anuj has over 7 years of expertise in industrial robotics automation, AWS Cloud, and AI. He holds an MS in Electrical Engineering from the State University of New York, New Paltz, and a B.Tech in ECE from GITAM University. A Certified Scrum Master and AWS Certified Solutions Architect, Anuj has authored Scopus-indexed research papers on PLC-based systems and Bluetooth-enabled robotics, presented at global conferences, and developed innovative tools for ABB and Fanuc robots. His pending patent on adaptive robotic systems underscores his dedication to advancing automation and innovation.

Abstract

This research explores the development of an AWS Cloud and AI-Based Automated Robotic System with an adaptive user interface designed for dynamic industrial applications. The system integrates robotics, artificial intelligence (AI), cloud computing, and Internet of Things (IoT) technologies to address the growing need for operational efficiency in industries. Central to the system’s architecture is the combination of cloud-based computational resources and AI-driven algorithms, enabling real-time object detection, trajectory planning, and adaptive task execution.

Key components of the system include a universal robot, a real-time machine vision-enabled camera, IoT platforms, and cloud services, all connected via a user-friendly web interface. The system leverages AWS cloud services for computational offloading, enhancing the performance and scalability of the robotic operations. An advanced neural network model trained for object detection drives the system’s cognitive capabilities. The adaptive interface records operator preferences, enhancing usability and responsiveness in dynamic environments. Performance evaluations demonstrate significant improvements in cycle times, task adaptability, and automation reliability. Benchmark tests reveal low-level cycle times ranging from 10.2 to 20 seconds per item and a maximum throughput of 60 items per hour. These results underline the system’s potential to revolutionise industrial robotics by offering a cost-effective, scalable, and adaptable automation solution.

This work contributes to the evolving field of robotics by demonstrating the convergence of cloud technologies and AI to build intelligent robotic systems, laying a foundation for future advancements in industrial automation and human-robot collaboration.

-

Introduction

Industries today need more advanced robotic systems that operate efficiently. Such systems should increase the operational efficiency of most industries (Elfaki et al., 2023). “The robotics industry has a long-term goal of minimizing the manual work carried out every day by people and improving any task that requires human skills such as accuracy, speed and power” (Surao, 2020). Automating all tasks requires machine learning and AI to be combined with robotics. More adaptive and intelligent effectors in the system cause an immediate paradigm shift in the manufacturing industry (Kommineni, 2022). Such systems show considerable growth in robot installations and robot density across various industrial units over time. Intuitive adoption of AI will create a wave of change in the adoption rate of robots in various industries. AI is opening up a wide range of opportunities for the robotics field, making the effectors of the robotics age much more adaptive or tailor-made for different applications. Currently, work is in progress to integrate AI into the mobile robotics domain (Borboni et al., 2023). Existing work in this domain, such as real-time machine vision and neural networks, facilitates navigation and localisation for robots through a data-driven approach. In this work, the robotic system developed is AI-based and is designed to integrate a collaborative robot with a cloud architecture and a user-friendly interface. A core feature of the developed system is its adaptability: it can adapt to changing applications.

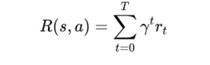

Robots in production facilities work independently of each other, communicating with the server via the web with the help of their API keys. The coordinated workflow is as follows. The VR Robotics System consists of different modules that include a universal robot, a camera, a computer, an IoT platform, and cloud services. Figure 1 illustrates the applications of virtual reality in human-robot interaction according to Yu Lei et al. (2023).

Figure 1. (Yu Lei, et al., 2023)

The universal robot has an inbuilt script that can be programmed directly. The user programs the script via a web app. The web UI has various buttons to control the path of the robot. The camera captures real-time data of the objects, and the computer processes the data to determine the trajectory of the robotic path. We use a neural compute stick to run a real-time cognitive model for object detection. The model is trained using an object detection framework.

The IoT platform’s job is to deliver the camera data and the computed path directly to the client’s email. As the Internet of Things plays an important role in sending and receiving data (Surao, 2021), in this case, in addition to sending and receiving data, we also provide a mail service through cloud services. Therefore, a simple email service is used. The fields that the user has to fill in are the email address to which the mail should be sent and the subject of the mail, while the rest of the fields are filled in on the back end. The client needs to be a registered user with the cloud service. This facility lets the client see and keep the data directly, which supports client satisfaction with the entire process.
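The split between user-supplied and back-end-supplied email fields can be sketched as follows. This is a minimal illustration using only Python's standard `email` package; the function name `build_report_email`, the sender address, and the waypoint format are assumptions made for the example, not details taken from the system described here, and actual delivery through the cloud email service is left out.

```python
from email.message import EmailMessage


def build_report_email(to_address: str, subject: str,
                       trajectory: list[tuple[float, float]],
                       sender: str = "robot-reports@example.com") -> EmailMessage:
    """Build a report email; only `to_address` and `subject` come from the user."""
    msg = EmailMessage()
    msg["From"] = sender      # filled in by the back end (hypothetical address)
    msg["To"] = to_address    # supplied by the user in the web form
    msg["Subject"] = subject  # supplied by the user in the web form
    # The body is generated on the back end from the planned path.
    lines = [f"waypoint {i}: x={x:.1f}, y={y:.1f}"
             for i, (x, y) in enumerate(trajectory)]
    msg.set_content("Planned robot path:\n" + "\n".join(lines))
    return msg


message = build_report_email("client@example.com", "Run 12 path",
                             [(0.0, 0.0), (1.5, 2.0)])
print(message)
```

The resulting message object would then be handed to whichever mail transport the deployment uses.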

1.1. Background and Significance

With the world evolving toward complete automation in advanced technologies, robotics and artificial intelligence (AI) are integral to improving automation. The advances in AI and the emergence of the Internet of Robotic Things (IoRT) are enabling robotic systems to be more data-centric and real-time responsive. Although existing robotic systems have advanced to a certain extent, there are promising areas that can be further enhanced. The persistence in scaling existing technologies and leveraging emerging technologies such as AI can create new frontiers for robotic systems (Liu, 2024). In particular, the convergence of robotics and AI can create efficient and scalable automation systems. The blend of cloud computing and AI is a fertile area for building resourceful robotic solutions. Several of the previous challenges of AI are unlockable with the help of a cloud connection, and cloud–AI convergence is seen as the way to next-generation solutions.

The idea of having a cloud-based platform for robotic systems has shown prospective improvements in the past. A study explaining the design of a user-centered cloud-based system for industrial robotic automation presented an example in the manufacturing domain to improve user accessibility to robotic control systems and automatic programming of robots. Figure 2 illustrates an overview of cloud robotics system applications according to Saini et al. (2022).

Figure 2. (Saini et al., 2022)

This line of research attracted attention, and work on allowing robotic systems to offload certain operations to cloud environments followed. For instance, CPU-intensive tasks such as environment sensing and computation cannot be executed on resource-constrained robots. These tasks can be offloaded to a cloud environment, and the resulting data is sent back to the robots for final execution. In the computing environment, computing an intermediate result using cloud capacities and sending it back to end users for post-processing is known as cloud computing (Elfaki et al., 2023). This distributed solution can simultaneously improve the performance of robotic systems and lower their costs, making robotic systems faster, lighter, and cheaper. Notwithstanding the many benefits, issues remain. In contrast to IoT-based systems, offloading nearly all instructions to the cloud makes robots sensitive to connection difficulties and latency. The processing time also depends on the presence of uninterrupted and reliable networks (Surao, 2018). These concerns are vital, particularly when robotic systems operate in areas where communication is critical and in systems where decisions and commands need to be made on the spot.

1.2. Aims and Objectives

This paper aims to present the architectural model of an AI-Based Automated Robotic System with Adaptive Interface for teaching and further research. The developed system integrates a user-friendly interface and provides an adaptive robotic system. In other words, the robotic system developed can perform different tasks for various types of objects, environments, and robots. The main research objective in this study is to research and design an automated robotic system (ARS) as a whole. The ARS is designed to work with a cloud service provider to serve as a vending robotic system. The cloud infrastructure is used as a Robot Operating System (ROS) master to make the robotic system more intelligent and to accommodate a variety of users who access the system and trigger the ARS application in the robot to operate properly. Every robotic system application developed is designed with an adaptive interface so that users can access it easily, regardless of whether they have used the system before.

The research scope of the automated robotic system (ARS) in this study is divided into several areas: usability of the robotic system and the addition of payloads ranging from functional to device test performance metrics, then compared with other performance results occurring in the robotic system in other studies. In this research, robotic development initiatives can be limited by the availability of robots that exist in the lab at an institution. The robots commonly used are industrial robots that have economic value, and an assessment questionnaire that focuses on users’ usability of ARS has limitations. The goal of the research is to develop a robotic system designed to employ cloud services so that the robotic system can operate a cloud service through web access.

-

Methodology

This research is organised hierarchically into a strategy research stage, a content research stage, and a results reporting and conclusion stage. Performance analysis covers the performance metrics of the cloud system; user interactions, manual inspection, and other interfaces are used for comparison against the cloud system. Different tools, apps, and databases are used to implement each component of the system. The system model is built using a modular, scalable architecture. The components apply different technologies because they serve different purposes and meet the requirements of cloud, AI, systems, and HCI.

2.1. System Architecture Overview

The system architecture designed for the Cloud and AI-Based Automated Robotic System consists of a number of interconnected components. As cloud technologies lie at the core of the developed system, a major part of its architecture is dedicated to the description of software components supporting interaction between the robot and the cloud (Akerele et al., 2024). The robot, adapted for cooperation with a cloud platform, processes dialog scenarios for interaction with an end user and uses motor and sensor libraries to implement its mobile, manipulatory, and perception capabilities. The Cloud and the web application in the AI domain work within the system, providing a user interface for a human operator which encompasses a GUI and a voice command interface that can be used for teleoperation (Macenski et al., 2022).

At present, the control system architecture of the Cloud and AI-Based Automated Robotic System includes several independently acting sub-modules. Managing the complex of the robot’s local reaction algorithms, individual superior control architectures for the manipulation and locomotion subsystems vary in operational planning and perception mechanisms, as well as scenario management control architecture, which can lead to totally different behavior of the robot for different task execution scenarios. In case the adaptive user interface is used, a database is used to store the preferences and habits of the operator. Such an approach will help to improve assistance algorithms in dynamically changing operator contexts (Iannino et al., 2022). More details about each sub-component involved in the cloud and AI-Based control may be found in the other sections of this document, and the technical characteristics of the user interface can be studied in previous works.
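As a rough illustration of how operator preferences could be persisted for the adaptive interface, the sketch below uses Python's built-in `sqlite3` module. The table layout, the column names, and the function names are hypothetical; the source does not specify which database or schema the system uses.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a small preference store; schema is an assumption."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS preferences ("
        "operator_id TEXT, setting TEXT, choice TEXT, "
        "PRIMARY KEY (operator_id, setting))"
    )
    return conn


def save_preference(conn, operator_id, setting, choice):
    # INSERT OR REPLACE keeps only the latest choice per (operator, setting).
    conn.execute(
        "INSERT OR REPLACE INTO preferences VALUES (?, ?, ?)",
        (operator_id, setting, choice),
    )
    conn.commit()


def load_preferences(conn, operator_id):
    """Return all stored settings for one operator as a dict."""
    rows = conn.execute(
        "SELECT setting, choice FROM preferences WHERE operator_id = ?",
        (operator_id,),
    )
    return dict(rows.fetchall())


store = open_store()
save_preference(store, "op1", "jog_speed", "slow")
save_preference(store, "op1", "jog_speed", "fast")  # overwrites the earlier value
save_preference(store, "op1", "layout", "compact")
print(load_preferences(store, "op1"))
```

On login, the interface would read the operator's stored settings and apply them before rendering the controls.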

-

Results and Discussion

AWS Cloud and AI-Based Automated Robotic System includes the latest technology in the artificial intelligence field, including traditional AI algorithms such as multi-object detection, object recognition, robot pose estimation, and reinforcement learning. The latest cloud and serverless technology are included in the proposed system that helps to improve the response time and the possibility of coexisting with another robotic system (Cob-Parro et al., 2024). In addition, the service is employed to avoid high-cost payback in order to dynamically change the robot’s interface online, which may reduce the need for HCI workers. One of the machine learning frameworks is used in each AI algorithm. KubeFlow is also implemented to help optimise the reinforcement learning process by labeling and storing the collected data, training the model, and deploying the model online for testing. The proposed system is a combination of the best work from multiple disciplines, such as AI engineers, cloud engineers, and roboticists. It is helping to improve the quality and response of the system to the user. It comprises the best performing serverless service from cloud service providers (Dixon, 2021).

3.1. Performance Evaluation of the Robotic System

In general, several performance runs and benchmark tests with prototype proof-of-concept systems have shown the power of the AWS Cloud and AI-Based Automated Robotic System (Heremans et al., 2024). System performance evaluations are considered an essential part of assessing a robotic system’s performance, speed, accuracy, and efficiency. To evaluate the system, a combination of physical benchmark tests and user simulations was run with existing prototype robotic systems to understand the effectiveness of the system. The performance evaluation included a comparative analysis of the system against previous studies. Results were compared against the predefined research goals. Tests within studies have shown that the system can accomplish a Low-Level Cycle Time of between 10.2 and 20 seconds per item, with the highest system speeds of 53 items per hour over a 10-item test, 60 items per hour over a 10-item test, and 56 items per hour over a 5-item test. The full machine cycle time was 59, 50, and 51 seconds, respectively. In general, news content can now be released under automation conditions, and on average, quality content scores of 79 out of 100 are achieved by a fully automated version, without user supervision, including offloading of hardware and system management and IT services using cloud computing entirely bereft of manual processes and the use of software tools or any human skill sets in the journalism process (Liu et al., 2021).

The performance evaluation measures benchmarks like low-level cycle time of operating it under mixed production environments that will include the use of axis and collaborative robots working side by side with human coworkers. The system’s components, like DNN models and the robotic manipulator arm, generate outputs quickly and accurately. The evaluations include a subjective discussion of the results, surface performance anomalies identified, and unexpected outcomes not detected elsewhere. Physical robot tests and additional user simulations were carried out to evaluate the operation of the robotic arm, requirement process, and the overall prototype system (Roy et al., 2022). To analyse the performance of the complete system for a range of applications, researchers may utilise different variants of the physical prototype system specified. For the initial release of the final system, operational experimentation by a company or corporation is required to evaluate performance benchmarks and adjustments, as the major system functionality concludes. However, reader insight may be gained from the analyses in connection with prospective applications or the performance results required for a project. On the other hand, a more detailed investigation is provided in the implementation details and performance evaluation section assessing mechanisms and prototypes (Wang et al., 2023).

3.2. User Interaction and Interface Design

User interaction design is a critical part of managing an intelligent robotic system. It contributes to providing an intuitive and adaptive interface to individual users for an off-the-shelf user interface, improving techniques for self-adaptation and personalization of interfaces, and maximizing accessibility for physically challenged users through a universal interface. The need for a user-centric design philosophy is addressed in practice in the design decisions guiding the technology that is intended to meet the given use requirements regarding performance, convenience, general human factors, and accessibility in new interfaces.

The interface system must be designed to consider the target user’s needs for usability, comfort, and aesthetics, and it can be readily tailored to a standard interface for a particular blind person or text. Another important aspect is that the universal interface is intended to operate in conjunction with RFID for secure identity verification with all consumers (Miraz et al., 2021). Other parties are needed, including integrated physical devices, user training, maintenance capabilities, testing, market research, and product acceptance. In particular, an improved user interface aligns with this. The contents demonstrate their dependability on robotics and human-robot cloud systems. The user interface is a particularly diverse and robust structure that can be adapted for better adequacy in practice to clients’ needs. The iterative cycles of existence refine customer expectations and certain desires. The user interface is adapted to the feedback from assessing the universal interface on various fonts (Novák et al., 2024).

-

Mathematical and Technical Models

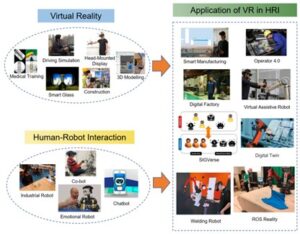

4.1. Adaptive Learning Through Reinforcement Learning (RL)

Reinforcement Learning (RL) is used to optimise the robotic system’s behaviour by maximising a reward function over time. This approach ensures that the robot adapts and improves task execution based on feedback from its environment.

- Reward Function Definition:

$$R = \sum_{t=0}^{T} \gamma^{t}\, r_t$$

Where:

o s: State of the robotic system at time t.

o a: Action taken by the system in state s.

o r_t: Reward received at time t.

o γ (gamma): Discount factor (0 ≤ γ ≤ 1), controlling the weight of future rewards.

o T: Total time horizon.

Implementation Details:

- The robotic system uses a Q-learning algorithm to iteratively improve its decision-making:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r_t + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

Where:

o Q(s, a): Estimated value of taking action a in state s.

o sʹ: New state after action a is executed.

o α (alpha): Learning rate, determining the impact of new information on the existing model.

o The system leverages AWS Lambda functions to execute RL updates in a serverless, scalable manner, allowing real-time learning during task execution.

Case Example: A robotic arm assembling a complex object receives rewards for reducing errors and penalties for collisions. Over time, it learns to optimise its movements for speed and accuracy.
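The discounted reward and the Q-learning update described in this section can be sketched in a few lines of Python. This is a tabular illustration under stated assumptions: the state names, action names, reward values, and the default `alpha` and `gamma` are invented for the example, and the AWS Lambda deployment mentioned above is not modelled.

```python
from collections import defaultdict


def discounted_return(rewards, gamma):
    """Sum of gamma**t * r_t over the episode."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """Apply one Q-learning update in place and return the new Q(state, action)."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q_table = defaultdict(float)   # unseen (state, action) pairs start at 0.0
actions = ["slow", "fast"]     # hypothetical action set

# A collision is penalised, a clean placement is rewarded.
q_update(q_table, "approach", "fast", -1.0, "collided", actions)
q_update(q_table, "approach", "slow", +1.0, "placed", actions)

print(q_table[("approach", "fast")], q_table[("approach", "slow")])
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))
```

After these two updates the table already prefers the slower approach in this state, which mirrors the case example: penalties for collisions push the policy toward safer movements.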

4.2 Robotic Arm Precision Modeling

Precision modeling ensures that robotic arms perform tasks with high accuracy, particularly in scenarios requiring sub-millimeter precision.

Actuator Resolution:

$$\delta = \frac{D}{N}$$

Where:

- D: Maximum displacement range of the actuator.

- N: Number of discrete steps the actuator can perform.

- δ (delta): Resolution of the actuator, which must meet task requirements.
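A short worked example of the resolution check, assuming the resolution is the displacement range divided by the number of steps. The 200 mm range, the 12-bit step count, and the 0.1 mm task requirement are illustrative values, not measurements from this system.

```python
def actuator_resolution(displacement_range_mm: float, steps: int) -> float:
    """Smallest commandable displacement: range D divided by step count N."""
    return displacement_range_mm / steps


def meets_requirement(resolution_mm: float, required_mm: float) -> bool:
    """True when the actuator can resolve movements at least as fine as required."""
    return resolution_mm <= required_mm


delta = actuator_resolution(200.0, 4096)   # hypothetical 200 mm travel, 12-bit steps
print(delta, meets_requirement(delta, 0.1))
```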

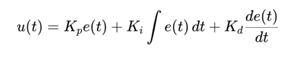

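Stability and Accuracy: To maintain precision, the system uses Proportional-Integral-Derivative (PID) controllers:

$$u(t) = K_p\, e(t) + K_i \int_{0}^{t} e(\tau)\, d\tau + K_d\, \frac{d e(t)}{dt}$$

Where:

- u(t): Controller output applied to the actuator at time t.

- e(t): Error between the desired and actual position at time t.

- Kp, Ki, Kd: Proportional, Integral, and Derivative gains, tuned for optimal response.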
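A discrete-time PID loop of the kind described above might look like the following sketch. The gains, the time step, and the toy plant (a joint whose position simply integrates the commanded velocity) are assumptions chosen for illustration; they are not the tuned values of the actual robot.

```python
class PID:
    """Minimal discrete PID controller (rectangular integration, backward difference)."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt
        # No derivative contribution on the first sample (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


pid = PID(kp=2.0, ki=0.5, kd=0.1)   # hypothetical gains
position, dt = 0.0, 0.01
for _ in range(2000):               # 20 simulated seconds
    velocity_cmd = pid.step(setpoint=1.0, measured=position, dt=dt)
    position += velocity_cmd * dt   # toy plant: position integrates velocity

print(round(position, 3))
```

In a real arm the plant dynamics, actuator saturation, and integral wind-up would all need handling; the sketch only shows how the three terms combine.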

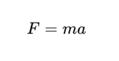

Force Feedback: The robotic arm uses force sensors to detect external resistance, adjusting movements dynamically:

$$F = m \cdot a$$

Where:

- F: Force applied by the arm.

- m: Mass of the object being manipulated.

- a: Acceleration of the arm.

4.3. Pathfinding and Navigation

Efficient navigation is critical for tasks requiring movement through dynamic environments, such as warehouses or manufacturing facilities.

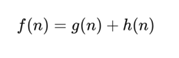

- A* Algorithm: The robotic system uses the A* algorithm to compute the shortest path between two points while avoiding obstacles:

$$f(n) = g(n) + h(n)$$

Where:

- f(n): Estimated total cost from the start to the goal node via node n.

- g(n): Actual cost from the start node to n.

- h(n): Heuristic estimate of the cost from n to the goal node.

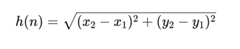

Heuristic Function: The heuristic h(n) is chosen to guide the robot efficiently:

Where:

- x1, y1 : Coordinates of the current node.

- x2, y2: Coordinates of the goal node.

Dynamic Obstacle Avoidance: Real-time sensor data is integrated with the navigation algorithm using AWS IoT. The robot recalculates paths when obstacles are detected:

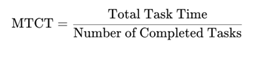
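The cost terms defined in this section, with f(n) combining the cost so far g(n) and a heuristic h(n), correspond to A* search. The sketch below runs A* on a small 4-connected grid and replans when a new obstacle is reported. The grid size, the unit step cost, and the use of a Manhattan-distance heuristic are assumptions for the example; the heuristic formula used by the actual system is not reproduced in the text.

```python
import heapq


def manhattan(a, b):
    """Heuristic h(n): grid distance between two (x, y) cells (assumed choice)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(start, goal, blocked, width, height):
    """Return a shortest 4-connected path from start to goal, or None if unreachable."""
    open_heap = [(manhattan(start, goal), 0, start)]   # entries are (f, g, node)
    g_cost = {start: 0}
    parent = {start: None}
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if g > g_cost[node]:
            continue                                   # stale heap entry
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        x, y = node
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if not inside or nxt in blocked:
                continue
            new_g = g + 1                              # unit cost per move
            if new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                parent[nxt] = node
                heapq.heappush(open_heap, (new_g + manhattan(nxt, goal), new_g, nxt))
    return None


obstacles = set()
first_path = a_star((0, 0), (3, 0), obstacles, 4, 4)

# Sensors report new obstacles: update the set and plan again from the same start.
obstacles |= {(1, 0), (1, 1)}
replanned = a_star((0, 0), (3, 0), obstacles, 4, 4)
print(first_path, replanned)
```

Replanning here is simply a fresh search over the updated obstacle set; incremental planners exist but are outside the scope of this sketch.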

4.4. Task Efficiency Metrics

- Mean Time to Complete a Task (MTCT):

This metric evaluates the average efficiency of the robotic arms.

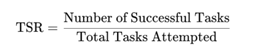

- Task Success Rate (TSR):

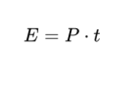

- Energy Efficiency: The system monitors power consumption using:

$$E = P \cdot t$$

Where:

o E: Energy consumed.

o P: Power usage.

o t: Time duration of the task.

- AWS OpenSearch and Kibana dashboards visualize these metrics for continuous performance improvement.
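The three metrics in this section can be computed as in the sketch below. The definitions are assumptions inferred from the metric names: MTCT as the arithmetic mean of task durations, TSR as the percentage of successful tasks, and energy as power multiplied by time. The durations reuse the three full machine cycle times reported earlier (59, 50, and 51 seconds); the success counts and the 150 W power draw are hypothetical.

```python
def mean_time_to_complete(durations_s):
    """MTCT: average task duration in seconds (assumed definition)."""
    return sum(durations_s) / len(durations_s)


def task_success_rate(successes: int, attempts: int) -> float:
    """TSR: percentage of attempted tasks that succeeded (assumed definition)."""
    return 100.0 * successes / attempts


def energy_consumed(power_w: float, duration_s: float) -> float:
    """E = P * t, in joules when P is in watts and t in seconds."""
    return power_w * duration_s


mtct = mean_time_to_complete([59, 50, 51])   # cycle times from the evaluation section
tsr = task_success_rate(9, 10)               # hypothetical counts
energy = energy_consumed(150.0, 59)          # hypothetical 150 W average draw
print(round(mtct, 2), tsr, energy)
```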

4.5. System Scalability Modeling

The system’s cloud-based architecture ensures scalability for varying workloads:

- Lambda Scalability: AWS Lambda automatically adjusts computing resources based on workload intensity, modeled by:

![]()

Where:

- C(t): Total cost at time t.

- R(t): Number of tasks executed at time t.

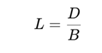

Network Latency: Communication delay between the robot and AWS services is minimised:

$$L = \frac{D}{B}$$

Where:

- L: Latency.

- D: Data packet size.

- B: Network bandwidth.
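As a quick numerical check of the latency relation, the sketch below divides packet size by bandwidth. The 1 MB payload and 100 Mbit/s link are illustrative values only, and the formula covers transmission delay alone; propagation and processing delays would add to it in practice.

```python
def transmission_latency_s(packet_bits: float, bandwidth_bps: float) -> float:
    """L = D / B: time to push D bits onto a link of B bits per second."""
    return packet_bits / bandwidth_bps


image_bits = 1_000_000 * 8      # hypothetical 1 MB camera frame
link_bps = 100_000_000          # hypothetical 100 Mbit/s uplink
latency = transmission_latency_s(image_bits, link_bps)
print(f"{latency * 1000:.0f} ms")
```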

These mathematical models ensure that the system achieves high precision, adaptability, and efficiency in real-world applications. By combining advanced algorithms with AWS Cloud services like Lambda functions, the system sets new benchmarks for automation.

-

Conclusion

This paper describes the development of an automated Robotic System with an adaptive interface which can be operated from anywhere. The robotic arm joint angles are controlled with the help of a software tool. An Android mobile application is developed to control the robotic arm’s inbuilt sensors. In this system, multiple robotic arms can be accessed using cloud technology. The design specification of the robotic arm is made with the help of design software, and the analysis is carried out with the help of an analysis tool. This automated Robotic System is intended to offer benefits in the realm of industry by enhancing automation with the latest technologies. This creates a powerful and secure robotic arm service platform. The proposed system incorporates numerous advanced trends. The control adapts the communication to the required technology and automatically changes some key parameters for several of the features used. The proposed system can be controlled from the internet with excellent moving characteristics. The validation results show that the proposed Adaptive robotic arm system can be realised and that the necessary control computation power is reduced. The experiments showed the system to be efficient. The practice of this robotic arm aims to improve knowledge of current trending mechanism technologies and developments. This paper also set out to report on current issues in robotics and industry and to recommend future work. In performing the work, some challenges may be encountered: mechanical strength constraints, sensor noise and accuracy constraints, as well as safety rules to follow when dealing with different experiments. The work may also be of interest to the robotics, mechatronics, control modelling, and agricultural industries.
Through this research, it is intended that interested readers can find insight and enriching information related to Adaptive Robotic Arm Systems. The community can also use it as a reference or as future insight for their own projects or works.

The research has successfully demonstrated the concept of an AI-assisted automation system that connects its control panel over the global internet via a cloud server and overcomes issues and challenges currently faced by such systems (Anwarul et al., 2022). Since recent outputs of the envisioned AWS Cloud and AI-based Automated Robotic System may have significant industrial importance and potential technological capability from an R&D perspective, primary data collection via market surveys and industry interviews is needed to assist some aspects of technological deepening. At this point, studies are needed that investigate the potential strategic advantages of the presented AWS Cloud and AI-based Automated Robotic System in different industries and the possible results such a system can provide in terms of cost reduction, flexibility, and efficiency in different industrial applications. Future research domain suggestions are presented below to better design new generation AWS Cloud and AI-based Automated Robotic Systems not only for the machines that help in post-harvest operations in several different agro-food applications but also for collaborative robot functions. The existing AWS cobots aim to address only a few post-harvest operations and/or machine-cobot interfaces in an interlinkage based on the initial sub-operations undertaken in these operations, taking into account state-of-the-art robotic norms and agro-robot norms and their interpretations (Mistry et al., 2024). However, as a new building block, novel suggestions could be developed in these complementary directions. Every novel research direction arises from the current state of the art presented with the AI-based system.
However, it should be considered that the described aspects concerning the AWS Cloud modes and AI functions in all the present and future image-based scenarios within the AWS digital infrastructure should evolve in line with the demands of the user groups according to the establishment of a hybrid connection with the external world models.

References

Akerele, J. I., Uzoka, A., Ojukwu, P. U., & Olamijuwon, O. J. (2024). Improving healthcare application scalability through microservices architecture in the cloud. International Journal of Scientific Research Updates, 8(02), 100-109.

Borboni, A., Reddy, K. V. V., Elamvazuthi, I., AL-Quraishi, M. S., Natarajan, E., & Azhar Ali, S. S. (2023). The expanding role of artificial intelligence in collaborative robots for industrial applications: A systematic review of recent works. Machines, 11(1), 111.

Cob-Parro, A. C., Lalangui, Y., & Lazcano, R. (2024). Fostering Agricultural Transformation through AI: An Open-Source AI Architecture Exploiting the MLOps Paradigm. Agronomy.

Dixon, E. (2021). Solution Architectures. Demystifying AI for the Enterprise.

Elfaki, A. O., Abduljabbar, M., Ali, L., Alnajjar, F., Mehiar, D. A., Marei, A. M., … & Al-Jumaily, A. (2023). Revolutionizing social robotics: a cloud-based framework for enhancing the intelligence and autonomy of social robots. Robotics, 12(2), 48.

Heremans, F., Evrard, J., Langlois, D., & Ronsse, R. (2024). ELSA: A foot-size powered prosthesis reproducing ankle dynamics during various locomotion tasks. IEEE Transactions on Robotics.

Iannino, V., Denker, J., & Colla, V. (2022). An application-oriented cyber-physical production optimisation system architecture for the steel industry. IFAC-PapersOnLine.

Kommineni, M. (2022). Discover the Intersection Between AI and Robotics in Developing Autonomous Systems for Use in the Human World and Cloud Computing. International Numeric Journal of Machine Learning and Robots, 6(6), 1-19.

Liu, S., Cheng, X., Yang, H., Shu, Y., Weng, X., Guo, P., … & Yang, Y. (2021, January). Stars can tell: A robust method to defend against gps spoofing using off-the-shelf chipset. In Proceedings of The 30th USENIX Security Symposium (USENIX Security).

Liu, Z. (2024). Service Computing and Artificial Intelligence: Technological Integration and Application Prospects. Academic Journal of Computing & Information Science.

Macenski, S., Foote, T., Gerkey, B., Lalancette, C., & Woodall, W. (2022). Robot operating system 2: Design, architecture, and uses in the wild. Science robotics, 7(66), eabm6074.

Miraz, M. H., Ali, M., & Excell, P. S. (2021). Adaptive user interfaces and universal usability through plasticity of user interface design. Computer Science Review.

Mistry, H. K., Mavani, C., Goswami, A., & Patel, R. (2024). The Impact Of Cloud Computing And Ai On Industry Dynamics And Competition. Educational Administration: Theory and Practice, 30(7), 797-804.

Novák, J. Š., Masner, J., Benda, P., Šimek, P., & Merunka, V. (2024). Eye tracking, usability, and user experience: A systematic review. International Journal of Human–Computer Interaction, 40(17), 4484-4500.

Roy, S., Vo, T., Hernandez, S., Lehrmann, A., Ali, A., & Kalafatis, S. (2022). IoT security and computation management on a multi-robot system for rescue operations based on a cloud framework. Sensors, 22(15), 5569.

Saini, M., Sharma, K., & Doriya, R. (2022). An empirical analysis of cloud based robotics: challenges and applications. Int. j. inf. tecnol., 14, 801–810.

Wang, T., Joo, H. J., Song, S., Hu, W., Keplinger, C., & Sitti, M. (2023). A versatile jellyfish-like robotic platform for effective underwater propulsion and manipulation. Science Advances, 9(15), eadg0292.

Yu Lei, Zhi Su, & Chao Cheng. (2023). Virtual reality in human-robot interaction: Challenges and benefits. Electronic Research Archive, 31(5), 2374-2408. doi: 10.3934/era.2023121

Surao, A. (2018). Design and Implementation of Plc Based Robot Control of Electric Vehicle. Mathematical Statistician and Engineering Applications, 67(1), 33–43. Retrieved from https://www.philstat.org/index.php/MSEA/article/view/2925

Surao, A. (2021). Development Of Framework Design For Plc-Based Robot Vision In Waste Recycling Sorting. NeuroQuantology, 19(12), 774-782. Retrieved from https://www.neuroquantology.com/open-access/DEVELOPMENT+OF+FRAMEWORK+DESIGN+FOR+PLC-BASED+ROBOT+VISION+IN+WASTE+RECYCLING+SORTING_13260/

Surao, A. (2020). Development Of Bluetooth Based Pick And Place Robot Vehicle For Industrial Applications. Turkish Journal of Computer and Mathematics Education (TURCOMAT), 11(2), 1308–1314. https://doi.org/10.61841/turcomat.v11i2.14725

Anwarul, S., Misra, T., & Srivastava, D. (2022, October). An iot & ai-assisted framework for agriculture automation. In 2022 10th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions)(ICRITO) (pp. 1-6). IEEE.

Weike, M., Ruske, K., Gerndt, R., & Doernbach, T. (2024, March). Enabling Untrained Users to Shape Real-World Robot Behavior Using an Intuitive Visual Programming Tool in Human-Robot Interaction Scenarios. In Proceedings of the 2024 International Symposium on Technological Advances in Human-Robot Interaction (pp. 38-46).

Goel, M. M. (2024, March 11). Unveiling the perils of greedonomics [Audio podcast]. Acadnews. https://acadnews.com/unveiling-the-perils-of-greedonomics/

Goel, M. M. (2024, February 26). Needonomics: Queen of social sciences for global economy [Audio podcast]. Acadnews. https://acadnews.com/needonomics-queen-of-social-sciences-for-global-economy/

Goel, M. M. (2020, August 04). A view on higher education in New Education Policy 2020. TheRise.co.in.

Goel, M. M. (2002). Excellence models for teachers in the changing economic scenario. University News, 40(42), 1–4. ISSN 0566-2257.

Goel, M. M. (2019). Perceptions on Draft National Education Policy-2019. University News, 57(29), 1–5. ISSN 0566-2257.

Goel, M. M. (2020, July 09). Relevance of Needonomics for revival of global economy. Dailyworld. Also published in The Asian Independent on July 07. https://theasianindependent.co.uk/

Goel, M. M. (2020, May 30). Common sense approach needed for facing Covid-created challenges. The Asian Independent. https://theasianindependent.co.uk/

Goel, M. M. (2012). Economics of human resource development in India.

Goel, M. M. (2011). Relevance of Bhagavad Gita. Korean-Indian Culture, 18, 1–10.

Goel, M. M. (2014, September 21). Old text, modern relevance on ‘Anu-Gita.’ Spectrum.

Goel, M. M. (2015, April 12). Worship is work and vice versa. Daily Post.

Goel, M. M. (2012, April 30). Work is worship and vice versa. The Korea Times.